Our Resources

From Siloed to Seamless: Vera Bradley Optimizes Design Collaboration with VibeIQ

Join our webinar to discover how Vera Bradley accelerated its design processes with VibeIQ. Hear from experts Kelli Thornson and Kat Kovac of Vera Bradley and Pam Buckingham of VibeIQ as they share insights on transforming Vera Bradley’s design operations. Quach Hai, VP of Customer Success at VibeIQ, will moderate the interactive session.

Navigating the Fashion Tech New Era: Tradition to Innovation Journey!

In an industry blending tradition with the latest tech, my journey in fashion technology has been quite a ride. Growing

VibeIQ Modernizes How Companies Bring Products to Market

The company launches a new website to engage with merchandisers, designers, and sales teams. March 13, 2024 – Today, VibeIQ,

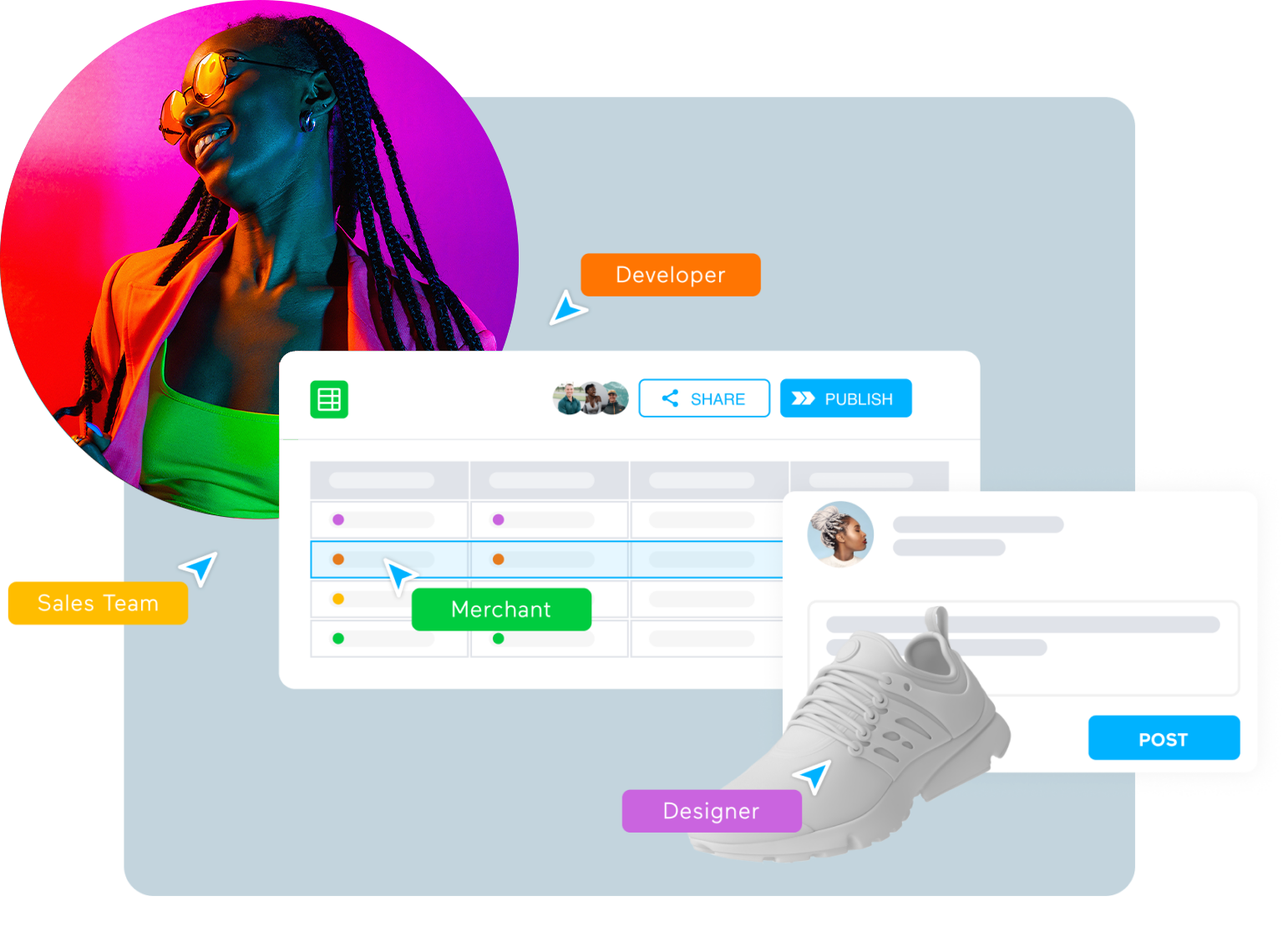

On-Demand Webinar: VibeIQ and VNTANA

Break down the silos in your organization. Learn to leverage 3D models, 2D sketches, and product data across Line Planning, Assortment Planning, Sell-In, and Sell-Through.

Digital Transformation in Fashion Retail: VibeIQ and VNTANA’s 3D Asset Solutions Seminar

In the fast-paced world of fashion retail, digital transformation is not just a trend; it’s a necessity. Our recent webinar

PI Apparel Merchandising 2023

October 23–24, 2023. VibeIQ is a proud sponsor of PI Apparel Merchandising. Join us on October 23–24. We

VibeIQ featured in The Interline’s 2023 PLM Report

This article was first published in The Interline’s PLM Report 2023, as part of a collection of perspectives on the current